In product management today there is a strong tendency to preach gathering hard data to support your reasoning before making an investment. Further, we are told we should be making the most incremental bets possible in order to validate assumptions before committing too much investment to potential waste.

These are all good intentions and practices, but I want to make sure everyone is aware that we must trust our gut from time to time. It is our product intuition that eventually takes us from our insights to a plan of action. We will never have perfect data to tell us exactly what features to build and how to design them.

Case in point: to get started with the build-measure-learn loop of Lean Startup, you need to start somewhere. Start with your gut, then build something minimal to test your predictions (aka hypotheses).

Testing My Gut in Practice

Over the last couple of weeks we have been running user tests of the Ask Me Anything capability of Chainlinq. It has been exciting to have people actually interacting with and using it for the first time.

The testing was designed to validate the functional scope and usability of the current product. We have lots more planned, including design improvements, but right now we are focused on getting core functionality in place while achieving our UX objectives.

Previously, I built various prototypes to validate different assumptions. However, this is the first time we have been able to test working software with users.

From the beginning, as we scoped and designed the product, we used our research into customer needs, design patterns, and technical know-how to scope and prioritize work. However, while we relied on these inputs, we also made subjective decisions on scope and design that had to be tested. In other words, we trusted our gut.

We learned a great deal very quickly in these limited tests. I evaluate the results through two questions:

- Is the scope and design correct for now?

- How important is it?

Is the scope and design correct for now?

We decided to test the current scope and design of the product to learn from our customers. We predicted that users would notice some missing features and run into some specific usability issues. Through testing, however, we also discovered that some of our design choices did not meet our users' objectives. We also uncovered some previously unknown defects.

How important is it?

We scoped and designed the current release based on assumptions about what matters most in helping users achieve their objectives in the product. True, these assumptions are built on a lot of prior research and analysis, but having real users in the product to validate them is enormously valuable.

Analyze the Outcomes

Ultimately, asking these questions helps us decide what to do next. We have a basic strategy that allows for learning and adjustment along the way, and analyzing this test data is the tool that lets us do just that.

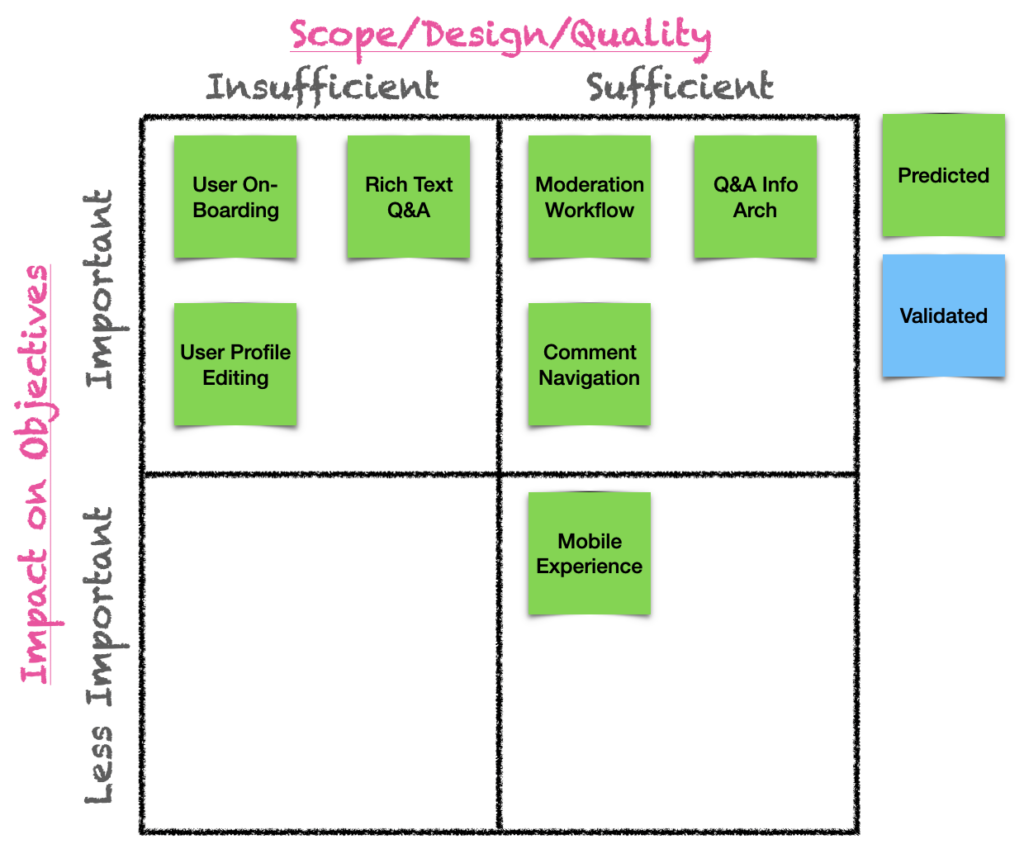

For simplicity, I mapped these on a 2×2 matrix that is a kind of next evolutionary step beyond Assumption Mapping. One dimension covers the importance of each fact or assumption to current objectives. The second dimension describes whether the current design (scope or quality) is sufficient as-is.

Below is a sample of predictions made before testing (left) and the results after testing (right).

It is important to make predictions before testing, because doing so helps you craft your test and sharpens your ability to make better predictions in the future.

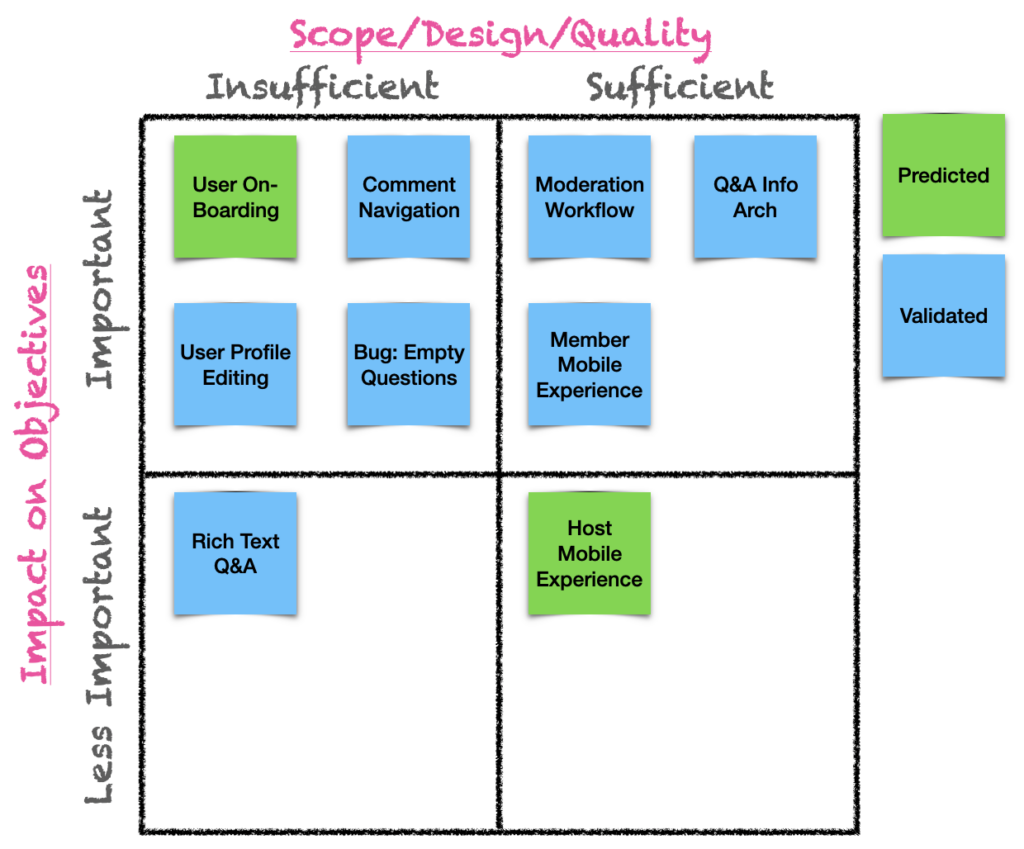

After testing, we can see that some predictions were validated, while others did not have enough data to validate.

Left: Pre-test Predictions. Right: Post-test Validated Results.

From this limited example, you can see we learned quite a bit, including:

- Critically, we validated some key information architecture and workflow concepts that are a major building block of this capability.

- I predicted that simple text might prove too limiting. While we received requests to support richer text, users did not see its absence as preventing them from meeting their objectives, nor did they view it as necessary. So while simple text is still insufficient for some, its importance dropped.

- Bugs appeared that we previously were not aware of and could not have predicted.

- The mobile experience for members became more important. In testing, a surprising number of members chose on their own to access the product from their mobile devices, even though we intentionally did not tell them it worked there. While it worked sufficiently on mobile, this means we cannot let its priority slip in our future work.

- Because these tests were self-hosted, it is unclear whether Guests and Moderators will have the same affinity for mobile. I still predict it will be less important for them, as most Guests and Moderators will make the time to sit in front of their computers when hosting an event.

- Comment Navigation did not land where we expected it to.

How does this feed our learning loop?

What this tells us is that some of our design decisions were good for this point in our journey. It also tells us that our Comment Navigation design was badly broken. Before the tests, we suspected it was not great, but we hoped testing would reveal just a few minor tweaks to improve it.

Rather than putting effort into our existing ideas for minor changes beforehand, we made the smart decision to just get it out there. Now we have clear evidence that we need to make a bigger design change.

While this will slow our progress toward our next objectives, ignoring this critical finding would be counterproductive. So instead, we slow down now to go faster later.

Conclusion

I will never say that your product intuition is always right. In fact, my example here shows it being right some of the time and wrong at other times. The important takeaway, however, is that you must use your gut to get past the hurdle of not having data, or not having the time to gather data frequently.

It is a critical part of being a great product manager. Once you learn to rely on your gut to get you unstuck, don’t forget to validate your predictions. Only when you forget this last step do you fall into trouble.

In the past I have written on related topics, including:

- Product Execution versus Discovery – In that article I describe scenarios where the problem and/or solution are clear and you just need to execute as well as or better than your competition.

- Better & Quicker Product Decisions – How to build confidence in making decisions.

- Developing Product Intuition – Making good decisions when hard data is lacking is a skill you can develop. I provide some techniques to do just that.